I'll tell you a little about an optimization technique I recently used in Factory Protocol and the difficulties I ran into while implementing it in Unity.

In the game I plan to use animated production machines, with spinning gears and manipulators doing their job. But with a skinned mesh (a model with a skeleton), instancing doesn't work. Instancing is when the same model is drawn in many places but counts as a single draw call, which helps a lot when the model has to be drawn a thousand times.

10,000 static models rendered with GPU Instancing

GPU instancing requires static meshes, but my models still need to move. The way out is to animate them myself, directly on the GPU, in a vertex shader.

To animate in the vertex shader, you bake the offset of every vertex of the model into a texture for every frame. Then, in the vertex shader, you use the vertex index to look up the right offset and add it to the vertex position. There is one small catch: the vertices have no indices available, so the indices must first be written into the mesh itself. I used the UV2 channel to store this extra per-vertex data.
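A minimal CPU-side sketch of the baking and lookup just described, assuming the "texture" is a plain nested list (one row per frame, one texel per vertex) and that UV2.x stores `(index + 0.5) / vertexCount` so it points at the centre of the vertex's texel. The UV2 layout and all function names are hypothetical; in the game the lookup runs in the vertex shader, not in Python.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Texture = List[List[Vec3]]  # texture[frame][vertex_index] -> offset stored as RGB


def bake_offsets(rest: List[Vec3], frames: List[List[Vec3]]) -> Texture:
    """Store, for every frame, each vertex's offset from its rest position."""
    texture: Texture = []
    for posed in frames:
        row = [(p[0] - r[0], p[1] - r[1], p[2] - r[2]) for p, r in zip(posed, rest)]
        texture.append(row)
    return texture


def index_uv2(vertex_count: int) -> List[Tuple[float, float]]:
    """Hypothetical UV2 layout: u points at the centre of vertex i's texel."""
    return [((i + 0.5) / vertex_count, 0.0) for i in range(vertex_count)]


def animate_vertex(rest_pos: Vec3, uv2: Tuple[float, float], texture: Texture, frame: int) -> Vec3:
    """CPU stand-in for the vertex shader: recover the index from UV2, fetch the offset, apply it."""
    width = len(texture[0])
    index = int(uv2[0] * width)  # flooring the texel centre gives the vertex index back
    dx, dy, dz = texture[frame][index]
    return (rest_pos[0] + dx, rest_pos[1] + dy, rest_pos[2] + dz)


# Two vertices, two frames: in frame 1, vertex 0 rises by 1 and vertex 1 by 0.5.
rest = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
frames = [rest, [(0.0, 1.0, 0.0), (1.0, 0.5, 0.0)]]
tex = bake_offsets(rest, frames)
uv2 = index_uv2(len(rest))
print(animate_vertex(rest[1], uv2[1], tex, 1))  # (1.0, 0.5, 0.0)
```

Storing the index at the texel centre rather than at its edge keeps the floor operation safe from small floating-point errors.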

One of the first, unsuccessful attempts at implementing the algorithm. Left: the original skinned mesh; right: a static mesh animated by the vertex shader.

The first try, of course, didn't work. The problem was that a texture stores colour values: four channels (RGBA), each in the range 0 to 1, while vertex offsets can span a much larger range and can even be negative. To normalize correctly, I started computing the maximum displacement of the model over a given animation and saving it alongside the animation texture. Each offset is divided by that maximum, which puts it in the range -1 to 1, and then remapped to the range 0 to 1. This let me pack the offsets correctly without much loss of precision.
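A small sketch of this normalization, assuming an 8-bit-per-channel texture and a per-component maximum (the text says "maximum displacement" without specifying whether it is per component or per vector length). Function names are hypothetical. With 8 bits per channel, the worst-case decoding error is `max_disp / 255`.

```python
from typing import Iterable


def max_displacement(offsets: Iterable[float]) -> float:
    """Largest absolute offset component over one animation (saved next to the texture)."""
    m = max((abs(o) for o in offsets), default=0.0)
    return m if m > 0.0 else 1.0  # avoid division by zero for a motionless clip


def encode(offset: float, max_disp: float) -> float:
    normalized = offset / max_disp  # now in [-1, 1]
    return normalized * 0.5 + 0.5   # now in [0, 1], storable in a colour channel


def decode(colour: float, max_disp: float) -> float:
    return (colour * 2.0 - 1.0) * max_disp  # inverse of encode, done in the vertex shader


def quantize_8bit(colour: float) -> float:
    """Simulate storing the value in an 8-bit-per-channel texture."""
    return round(colour * 255) / 255


offsets = [-3.0, -0.7, 0.0, 1.2, 3.0]
m = max_displacement(offsets)
restored = [decode(quantize_8bit(encode(o, m)), m) for o in offsets]
worst = max(abs(a - b) for a, b in zip(restored, offsets))
print(m, worst <= m / 255 + 1e-12)  # 3.0 True
```

Because the error scales with `max_disp`, a clip with one large movement loses precision on all its small movements, which is a reason to store the maximum per animation rather than globally.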

Chicken test: checking the algorithm on a model downloaded from the internet. The strip on the right shows the textures with the baked animations.

As you can see, all the models animate in sync, because they are all drawn with the same texture and the same time value. For some of them to play a different animation, they have to be drawn in a separate collection, which is not a big problem. The real problem is desynchronizing them in time: if you give each object in the collection its own material, the collection splits into as many draw calls as there are objects, and you can forget about the optimization. The way out is a texture that stores a time offset into the animation for each model in the collection, indexed by the model's number.
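A sketch of how such a per-instance offset might be used, assuming the offset is stored as a phase in [0, 1) (which keeps it inside a colour channel's range) and that animations loop. The function names and the phase representation are assumptions, not the author's code.

```python
import random
from typing import List


def build_phase_texture(instance_count: int, seed: int = 0) -> List[float]:
    """One value per instance in [0, 1), so it fits in a single colour channel."""
    rng = random.Random(seed)
    return [rng.random() for _ in range(instance_count)]


def frame_for_instance(instance_id: int, time: float, phases: List[float],
                       clip_length: float, frame_count: int) -> int:
    """Same global time for every instance, shifted by that instance's phase."""
    t = (time / clip_length + phases[instance_id]) % 1.0  # normalized, looping playback position
    return min(int(t * frame_count), frame_count - 1)


phases = [0.0, 0.25]
print([frame_for_instance(i, 1.0, phases, 2.0, 60) for i in range(2)])  # [30, 45]
```

All instances still share one material and one global time, so the collection stays a single draw call; only the texture lookup by instance number differs.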

This is where an unpleasant problem comes up. To know a model's number within the collection, you need its instance ID. But I use URP and Shader Graph, which, as it turned out, had no access to the instance ID, so I had some hard thinking ahead of me. Not for long, though: fortunately, a couple of days before I hit this issue, Unity had released a new beta version that added instance ID support to Shader Graph.

Once I had the instance ID (IID), I decided to colour each model in the collection by its index, on a gradient from blue to yellow. I set up a collection of 1000 models: the first should have been blue and the last yellow. Instead, this happened:

Strangely, across the 1000 objects the index restarted three times and never went above 454. After spending an extra day investigating (not easy, given the lack of documentation), I found the cause: the collection's data buffer is 64 KB (65,536 bytes), and the data for one object in the collection takes 144 bytes (two matrices, an index and something else). Simple arithmetic gives 65536 / 144 ≈ 455.1, so 455 objects fit, with indices 0 to 454: there it is. So a single collection can hold at most 455 objects if I want their IIDs to be tracked correctly. Fine: I limit each collection to 400 objects, tweak the target colour slightly and look at the result:
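The capacity arithmetic above, as a short check. The 144-byte figure is consistent with two 4x4 float matrices (2 × 64 bytes) plus 16 bytes of other data, but that breakdown is my assumption. `observed_ids` is a hypothetical model of the three restarts: it assumes the draw is split into consecutive chunks that each restart the instance ID at 0.

```python
import math

BUFFER_BYTES = 64 * 1024   # 65536 bytes, the buffer size found above
BYTES_PER_INSTANCE = 144   # per-object data size found above


def max_instances(buffer_bytes: int = BUFFER_BYTES, per_instance: int = BYTES_PER_INSTANCE) -> int:
    return buffer_bytes // per_instance  # 65536 // 144 == 455, i.e. IDs 0..454


def observed_ids(total_objects: int, batch_size: int) -> list:
    """Assumed model: the draw is split into chunks of batch_size, each restarting IDs at 0."""
    return [i % batch_size for i in range(total_objects)]


cap = max_instances()
ids = observed_ids(1000, cap)
print(cap, max(ids), ids.count(0))  # 455 454 3
print(math.ceil(10_000 / 400))      # 25 collections of at most 400 objects
```

Under this model, 1000 objects become chunks of 455, 455 and 90, which matches the gradient restarting three times.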

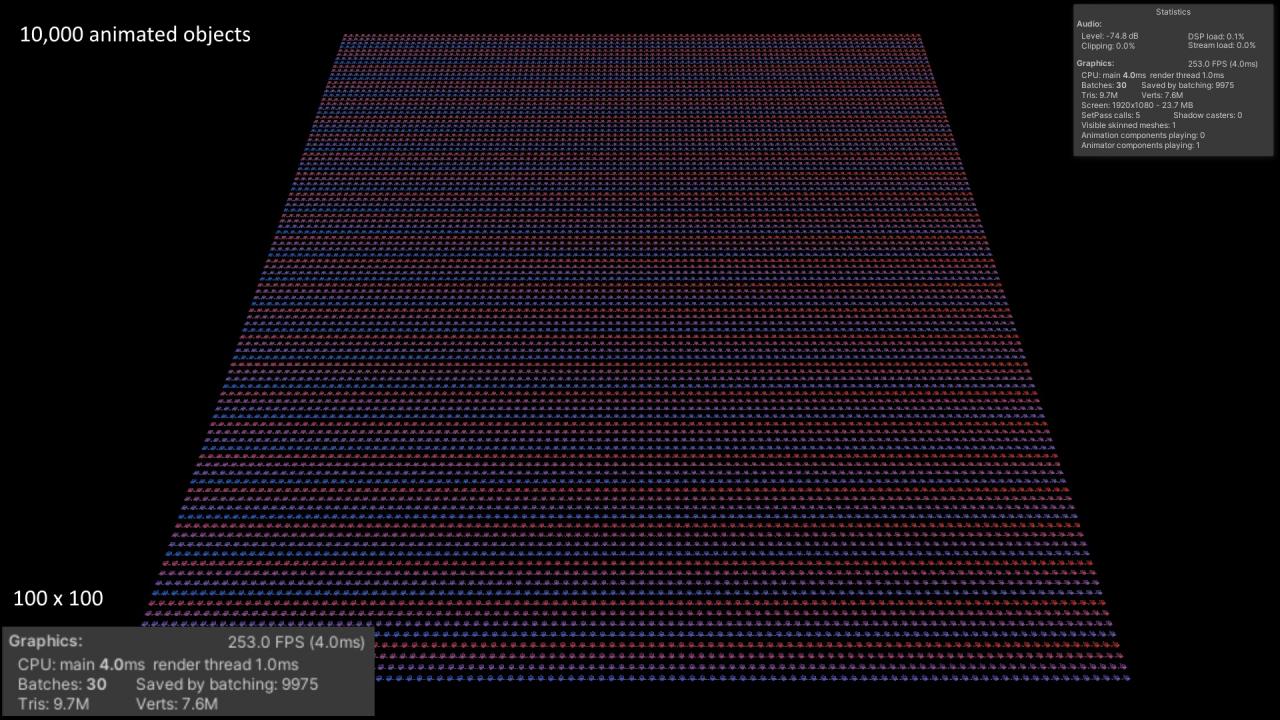

10,000 animated objects in groups of 400 models

Great: now I can identify every model in every group and read its parameter from the animation offset texture. And since each group is drawn with a separate call, each group can be given its own offset texture and its own animation. That requires a custom renderer/batcher that draws all the models in their required states; I'll talk about the batcher next time.
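A hypothetical sketch of the grouping step such a batcher might perform (the actual batcher is not described here): objects sharing an animation are grouped, each group is cut into draw calls of at most 400 objects, and an object's position within its batch is its instance ID, i.e. the slot for its time offset in that batch's offset texture. All names are illustrative.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Tuple

MAX_PER_GROUP = 400  # stays below the 455-instance limit


@dataclass
class Batch:
    animation: str
    object_ids: List[int] = field(default_factory=list)  # position in this list == instance ID


def build_batches(objects: Iterable[Tuple[int, str]], max_per_group: int = MAX_PER_GROUP) -> List[Batch]:
    """Group objects by animation, then cut each group into draw calls of at most max_per_group."""
    by_animation: Dict[str, List[int]] = defaultdict(list)
    for object_id, animation in objects:
        by_animation[animation].append(object_id)
    batches = []
    for animation, ids in by_animation.items():
        for start in range(0, len(ids), max_per_group):
            batches.append(Batch(animation, ids[start:start + max_per_group]))
    return batches


scene = [(i, "walk" if i % 2 == 0 else "idle") for i in range(1000)]
batches = build_batches(scene)
print([(b.animation, len(b.object_ids)) for b in batches])
# [('walk', 400), ('walk', 100), ('idle', 400), ('idle', 100)]
```

Each `Batch` would then be one instanced draw call with its own animation texture and its own offset texture.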

Result

Current progress with GPU animation: 10,000 animated objects with individual states run at 250 FPS, and rendering takes only 1 ms. The bottleneck is now the CPU; I'm trying to work out exactly what is slow and whether it can be sped up enough to draw 20,000 to 40,000 objects.